The Netherlands is aging! According to the Central Bureau of Statistics in the Netherlands, 2.7 million people were older than 65 in 2012, and this number is expected to rise to 4.7 million by 2040. One of the detrimental consequences of aging is the enormous burden on health care.

Because the number of people over 65 is increasing, care volume is expected to grow by 4% per year. Besides a huge increase in care costs, the relative number of people working in this sector will also decrease due to aging, which increases the workload. One way to relieve the pressure on healthcare in the coming years is to use smart robotic systems that help older people continue living independently in their own homes for longer.

In 2012, several companies and institutions indicated that they wanted to develop knowledge about the essential building blocks of a medical care robot, and they approached the Mechatronics research groups at Avans and Saxion University of Applied Sciences to build this knowledge. In the first phase of the project, Avans and Saxion investigated five building blocks: compliant gripping, vision, navigation, exoskeleton with sensors, and user interaction. The research had two starting points: the proof-of-concept solutions, which were designed to investigate the technical risks in the project, should (1) be low-cost and (2) have a modular character. The modular structure of the project enables researchers to incorporate their solutions into various platforms, and solutions can be extended beyond medical applications to industrial automation and agriculture. In the second phase of the project, this knowledge was used to build an autonomous, navigating vehicle that can recognize, locate, pick up and discard specific objects (SaxBot). In addition, several other demonstrators were realized, including one that demonstrated microslip sensing with twisted wire actuation. These demonstrators were presented to industry and the public during the successful closing Mechatronics Forum at Saxion.

The RAAK PRO project “Medical Robotics” (PRO-3-26) was initiated in 2012, together with the start of the applied research group Mechatronics at Saxion. RAAK PRO is a program of Stichting Innovatie Alliantie (SIA) that financially supports research at universities of applied sciences (UAS). The research was performed by lecturers, researchers and students from the universities of applied sciences and was motivated by companies and other research institutes. Saxion started multiple spin-off projects based on the knowledge gained in this Medical Robotics project. These projects include automatic navigation and recharging of drones for agriculture, machine vision solutions and applications, and simultaneous localization and mapping in unknown spaces.

Research question

The project “Medical Robotics” was set up to gain knowledge about the main building blocks of a medical care robot and to combine the results of the research into working demonstrators. Five building blocks were defined based on the input of the industrial partners: compliant gripping, vision, navigation and mapping, exoskeleton and sensors, and user interaction. The results of three of these building blocks are explained below.

Stakeholders - Research Partners

Avans hogeschool

RAM Utwente

Stakeholders - Industrial Partners

DEMCON

Roessingh Research and Development

Mecon

Focal Meditech

Results

Vision systems are essential in smart medical care robots. They allow the robotic platform to visualize the environment, recognize objects by shape and color, and interact with the end users. The goal of the research in the building block Vision was to develop a vision system that supports a robotic platform in navigating through an unknown environment and allows it to recognize specific objects in this environment. The two main starting points were low cost and modularity.

Saxion organized a racing competition in which student teams were asked to design a miniature autonomous racing car that can navigate around an unknown racing track with static obstacles. After defining the system requirements, the students developed a proof-of-concept setup. It consisted of a chassis (Reely) with a DC motor, three front and one rear ultrasonic sensors for obstacle detection, a camera (Pixy cam) for detecting the track border and a Raspberry Pi 3 for processing. The Robot Operating System (ROS, Jade release) was used as the software platform, which allowed easy and modular use of multiple sensors. The location of the track borders was detected and a corrective steering command was issued to steer the racing car back on track. With these experiments, the students showed that vision in combination with ultrasonic sensors can be a useful tool for the final demonstrator.

Two computer science students from Saxion used stereo vision for navigation in an unknown environment. They used two webcams (Logitech C920) and triangulation to create a 3D map of the environment, which was then used to determine the location of the two cameras in space. The 3D map was created after lens correction, rectification and computation of the disparity map. They moved the stereo vision system around in a horizontal plane and finally returned it to the starting position. The data was compared with that of a more expensive, ROS-based VI-sensor (Skybotix, Switzerland) that made the same movement. As a reference, the position of both systems was tracked with an OptiTrack (Flex13) system, which uses ten cameras to determine the position of reflective objects in a room very accurately. They concluded that position estimation with two low-cost webcams is possible with an accuracy of several centimeters. Synchronizing the images of the two webcams was difficult and probably caused the large deviation. The VI-sensor showed better agreement with the reference system.
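
The triangulation step can be made concrete with the standard relation for a rectified stereo pair, in which depth equals focal length times baseline divided by disparity. The sketch below is illustrative only: the function names and the numbers in the usage note are assumptions, not values from the project.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_error_px):
    """First-order depth error caused by a disparity error: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px
```

As an illustration, with an assumed focal length of 700 pixels and a baseline of 0.1 m, a disparity of 35 pixels corresponds to a depth of about 2 m, and a one-pixel disparity error at that depth shifts the estimate by roughly 6 cm. Because the error grows with the square of the depth, small matching errors, such as those that can arise when the two images are not captured at the same instant while the rig moves, may plausibly account for deviations of several centimeters.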

One of the demonstrators that was shown during the Mechatronics Forum was a vehicle that is able to autonomously recognize and pick up objects.

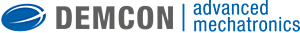

The object of interest was a red cylinder (75 mm high, 60 mm in diameter) made of a combination of foam and felt. Multiple vision modules were considered for determining the location of such an object in an unknown environment. One option was a Kinect 360 (Microsoft), because of its low cost and the 3D map it provides. However, the Kinect turned out to be physically too large for the X80SV (DrRobot) and was therefore not usable as a camera system. Stereo vision was also considered, but because of the synchronization issues we decided to use a single Logitech camera (C920) and to calculate the location of the red object relative to the camera from its location in the frame. This is only possible if the red object is always located in one horizontal plane (i.e. on the floor). The height, opening angle and mounting angle of the camera relative to the floor are known and stay constant. The OpenCV computer vision library was used to detect the red object. The location in the frame could be determined based on the dimensions of the red object. One of the major drawbacks of using color information to detect objects is the influence of illumination on the image, so a color calibration was carried out every time the system was used. A typical result of the red object detection algorithm is shown in the image. The exact location relative to the camera was determined using the center point of the object. An accuracy better than 1 cm could be achieved, which was sufficient for detecting and localizing the red object in an unknown environment.

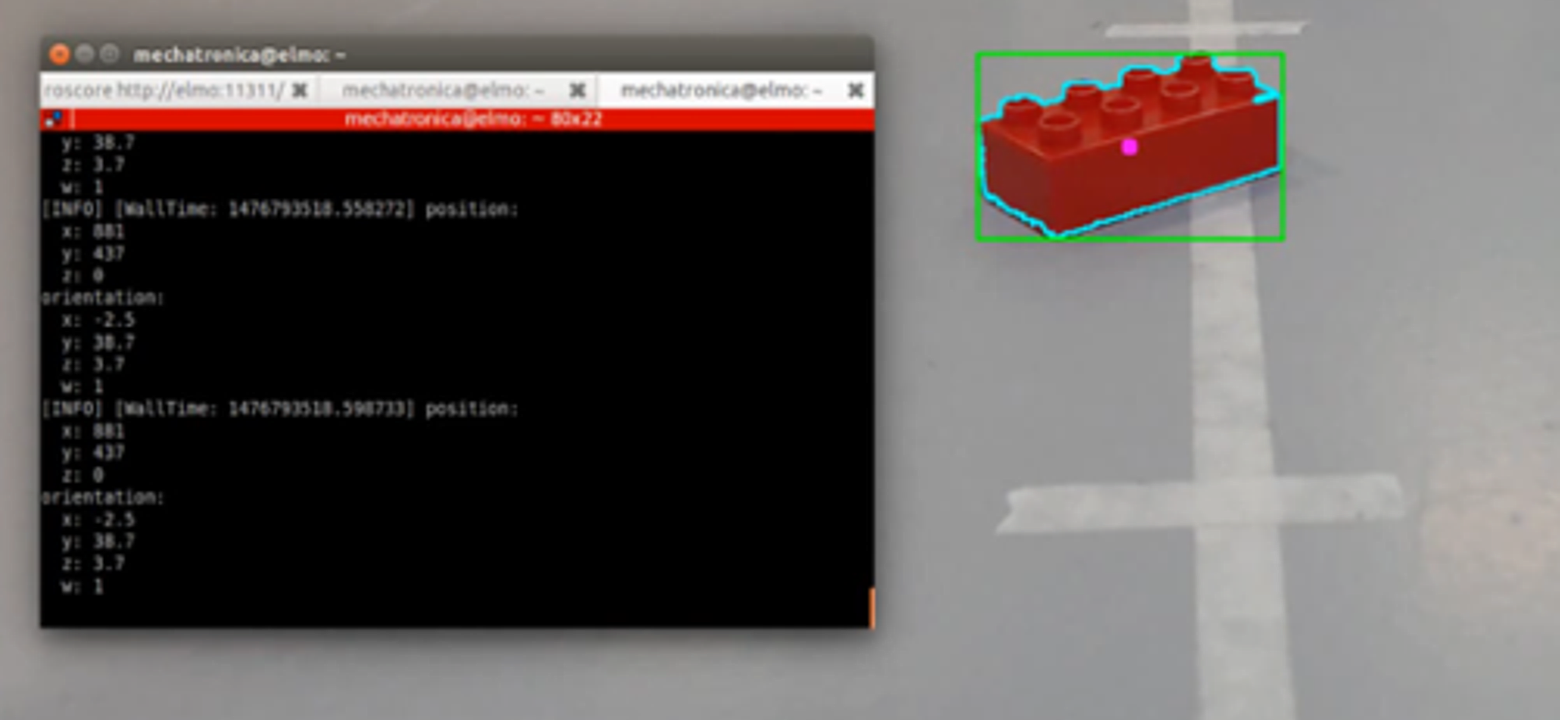
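
The single-camera localization described above relies on the ground-plane constraint: once the camera height, opening angle and mounting angle are fixed, each pixel corresponds to exactly one point in the horizontal plane. The following is a minimal sketch of that geometry under an ideal pinhole model without lens distortion. The function name `locate_on_floor` and all parameters are hypothetical, and the code is not the project's implementation.

```python
import math


def locate_on_floor(u, v, image_size, hfov_deg, vfov_deg,
                    cam_height, tilt_deg, point_height=0.0):
    """Map pixel (u, v) to (forward, lateral) distances in metres.

    Assumes an ideal pinhole camera at cam_height above the floor, pitched
    down by tilt_deg, looking at a point point_height above the floor.
    Returns None if the pixel's viewing ray never reaches that plane.
    """
    width, height = image_size
    # Focal lengths in pixels, derived from the opening angles.
    fx = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    fy = (height / 2.0) / math.tan(math.radians(vfov_deg) / 2.0)

    # Normalized ray in the camera frame (x right, y down, z forward = 1).
    x_c = (u - width / 2.0) / fx
    y_c = (v - height / 2.0) / fy

    # Rotate the ray by the camera tilt into a floor-aligned frame.
    tilt = math.radians(tilt_deg)
    forward = math.cos(tilt) - math.sin(tilt) * y_c
    down = math.sin(tilt) + math.cos(tilt) * y_c
    if down <= 0.0:
        return None  # ray points at or above the horizon

    # Scale the ray so that it drops exactly to the plane of the point.
    scale = (cam_height - point_height) / down
    return scale * forward, scale * x_c
```

For example, a camera mounted 0.3 m above the floor and tilted 45 degrees downward sees the floor point 0.3 m ahead at its image centre. Passing half the object height as `point_height` would account for tracking the center point of the object rather than its base.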

The capability to pick up objects is essential for any robot with a physical task. A ‘compliant’ gripper has flexibility in its drive system, which enables it to pick up a range of different objects with less risk of damaging them. This comes at the expense of task speed, which is less important for a care robot than for an industrial application. A gripper can be divided into the finger(s), actuation, control and sensors. Building up knowledge on these elements and their integration has been an essential part of the project to get a 'grip on gripping'. Students designed and built a variety of fingers with one, two or three phalanges, or fully flexible ones, and integrated these into grippers with two or three fingers. An interesting innovation was the '2.5 phalange' finger, which has a small non-driven flexible tip for picking up small objects. One of the results in this building block was a 'compliant gripping' test setup aimed at investigating how the interaction between finger design, drive train flexibility and control algorithm affects gripping performance.

The grippers used underactuation for lower complexity and higher task flexibility. The final transmission of force from the gripper base into the fingers was mostly done with one cord and a return spring. Several principles were built for the transmission from a (fast) rotating motor to a (slow) translation in the gripper base, such as pulleys and a spindle. Another principle that was studied and tested during the project is the Twisted String Actuator (TSA). This is a rotation-to-translation transmission that twists two (or more) strings around each other, which shortens their end-to-end length. This work resulted in two papers: E. Kivits, "Actuators with a twist," Mikroniek, vol. 2, pp. 28-30, 2017, and R. Zwikker and E. Kivits, "A transmission principle for robotic devices," Mikroniek, vol. 2, pp. 21-26, 2017.
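
To illustrate why twisting strings turns a fast rotation into a slow translation, the sketch below uses a commonly used idealized helix model, in which a string of fixed length winds around the twist axis at a constant radius. This model and the names `tsa_contraction` and `tsa_rate` are assumptions for illustration and are not necessarily the model used in the cited papers. String stretch and the growth of the bundle radius are ignored.

```python
import math


def tsa_contraction(string_length, twist_radius, motor_angle):
    """Shortening (m) of a twisted string pair after motor_angle radians.

    Idealized helix model: string_length^2 = end_to_end^2 + (angle * radius)^2.
    """
    wound = abs(motor_angle) * twist_radius
    if wound >= string_length:
        raise ValueError("twist angle exceeds what this simple model allows")
    return string_length - math.sqrt(string_length ** 2 - wound ** 2)


def tsa_rate(string_length, twist_radius, motor_angle):
    """Contraction per radian of motor rotation (m/rad) at the given angle."""
    wound = abs(motor_angle) * twist_radius
    if wound >= string_length:
        raise ValueError("twist angle exceeds what this simple model allows")
    end_to_end = math.sqrt(string_length ** 2 - wound ** 2)
    return abs(motor_angle) * twist_radius ** 2 / end_to_end
```

With illustrative values of a 0.1 m string, a 0.5 mm twist radius and 100 rad of motor rotation (about 16 turns), the model gives roughly 13 mm of contraction, and each additional radian shortens the string by only about 0.3 mm. The rate is not constant: it starts at zero and increases with the twist angle, which a controller would need to take into account.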

In the navigation work package of the Medical Robotics project, the X80SV (DrRobot) mobile platform and the Robot Operating System (ROS) were used as the main hardware and software development platforms, respectively. These choices were made because they allow the implementation of a low-cost, open-source, modular and reconfigurable navigation system. We investigated how to design and realize an autonomous navigation system, which is one of the core functionalities of the demonstrator SaxBot, besides manipulation and vision.

The mobile platform is small and lightweight, and is equipped with wheel encoders, ultrasonic sensors and infrared sensors. During this project, additional proprioceptive and exteroceptive sensors and onboard processors were added. The open-source robot software platform ROS facilitates the reuse of code with little or no adaptation, and it allows resources to be shared concurrently and computational loads to be distributed. Moreover, it is supported by a large and growing community.

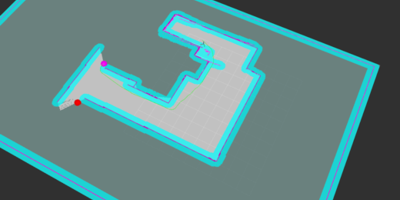

The basic aspects of autonomous navigation are determining the current location and the desired destination of the mobile platform in its environment, and its ability to navigate safely between these points in a possibly cluttered and unknown environment. Basic functional blocks were realized for the definition and (high-level and low-level) interpretation of the task, for motion control and interaction control with the environment, for perception of the environment, and for (simultaneous) localization and mapping. Various hardware parts (sensors, actuators, processors) and software algorithms were used and developed to realize each functionality. The task, for example, can be defined by an operator (continually or intermittently) or by another robot. This allows various control modes, ranging from manual, direct control to completely autonomous operation, in both standalone and cooperative (with another robot) modes. The task is used to determine the global and local paths that the robot attempts to follow to navigate safely to the desired destination (see the figure above).
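
As an illustration of what a global path is, the sketch below plans a shortest route on a small occupancy grid with breadth-first search. It is not the planner used in the project: the grid representation, the four-connected moves and the name `plan_global_path` are assumptions made to keep the example short.

```python
from collections import deque


def plan_global_path(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    start and goal are (row, col) tuples. Returns the list of cells from
    start to goal, or None when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])

    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]

        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)

    return None
```

A global path of this kind is computed from the map known so far. A local planner then follows it while reacting to obstacles that the sensors detect along the way.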

The local path is updated based on information from the sensors, such that static and dynamic obstacles are avoided and the target destination is reached. Various algorithms, such as the unscented Kalman filter, the particle filter, and combined Kalman and particle filters, were used to simultaneously localize the robot and map the environment. For partially or fully known environments, Adaptive Monte Carlo Localization (AMCL) was used. Sensor fusion of multiple proprioceptive (wheel encoder, IMU) and exteroceptive (Hokuyo and Neato laser range finders, ultrasonic and infrared) sensors was implemented to increase the robustness of the localization and mapping processes.
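
The idea behind particle-filter localization can be shown with a one-dimensional toy example: a robot drives along a corridor toward a wall at a known position and measures its distance to that wall. The sketch below is plain Monte Carlo localization with a fixed number of particles, not the adaptive AMCL variant and not the project's code. The function names, noise levels and corridor dimensions are assumptions.

```python
import math
import random


def mcl_step(particles, motion, measured_range, wall_x, rng,
             motion_sigma=0.05, range_sigma=0.2):
    """One predict / weight / resample cycle of a 1D particle filter."""
    # 1. Predict: apply the odometry motion with noise (proprioceptive data).
    moved = [p + motion + rng.gauss(0.0, motion_sigma) for p in particles]

    # 2. Weight: compare the measured range with the range each particle
    #    would expect to the known wall (exteroceptive data).
    weights = [
        math.exp(-0.5 * ((measured_range - (wall_x - p)) / range_sigma) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        return moved  # measurement matches no particle: keep the prediction

    # 3. Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=1):
    """Simulate ten steps and return (true position, estimated position)."""
    rng = random.Random(seed)
    wall_x, true_x = 10.0, 2.0
    # The initial position is unknown: spread particles over the corridor.
    particles = [rng.uniform(0.0, wall_x) for _ in range(500)]
    for _ in range(10):
        true_x += 0.5
        measured = (wall_x - true_x) + rng.gauss(0.0, 0.1)
        particles = mcl_step(particles, 0.5, measured, wall_x, rng)
    return true_x, sum(particles) / len(particles)
```

Calling `run_demo()` returns the simulated true position together with the mean of the particles, which should lie close to it after a few steps. The prediction step uses odometry and the weighting step uses a range sensor, so even this toy filter fuses a proprioceptive and an exteroceptive source.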
